Exploring E-Commerce and Entertainment (VOD) Solutions

-

A Deep Dive into the Benefits of Headless E-Commerce

Businesses are always looking for new ways to improve their websites and give customers a great online shopping experience. One popular strategy is headless e-commerce. In the past, online stores ran on a single system in which the front end and back end were tightly coupled. Shopping online without a plan…

-

Top 7 Best Audio Streaming Platforms For 2024

Before exploring the best audio streaming platforms for 2024, it is crucial to get a comprehensive overview of the global music streaming service market. This overview should include insights into key growth drivers, challenges, trends, and opportunities. Businesses can leverage dynamic analysis to formulate…

-

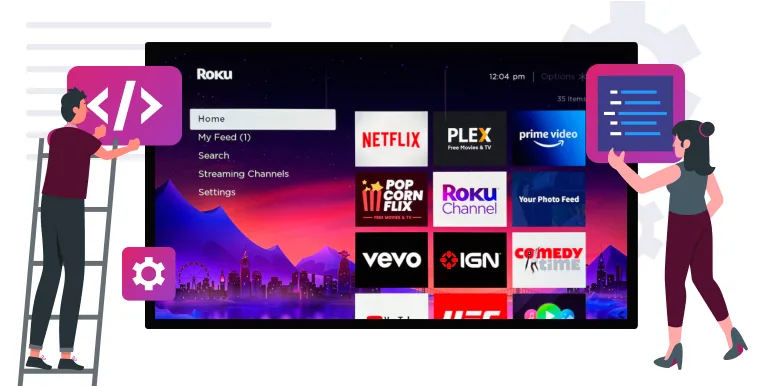

How To Build A Roku TV App & Guide On Roku App Development In 2024

Cord-cutters have significantly reshaped the landscape of streaming videos, including movies and TV shows, with Roku emerging as the leading platform for enjoying diverse media content. The inception of Roku in 2002 in Los Gatos, California marked the beginning of a transformative era in home entertainment. From…

-

What Are Video Streaming Services? Definition From Webnexs

In 2023, video streaming services are overtaking traditional cable TV, as more people opt for streaming because of its convenience, affordability, and flexibility. With so many options available, choosing the best one for your needs can be overwhelming. To help you make an informed decision, we’ve…

-

Top 5 Best Muvi Alternatives For Your Video Business

This blog focuses on the surging popularity of Muvi, a robust video monetization platform. However, when content creators decide on a platform for their video enterprise, making an informed choice is vital. Hence, it becomes essential to explore the notable competitors of this platform to make an…

-

What Is VOD? What Does Video On Demand Stand For & Its Meaning

Video on Demand has emerged as the present and future of the broadcasting industry, revolutionizing the way content is consumed. A report by Conviva indicates a significant surge in VOD viewership, with a year-over-year increase of 155%. On average, viewers spend approximately 17.1 minutes per session…

-

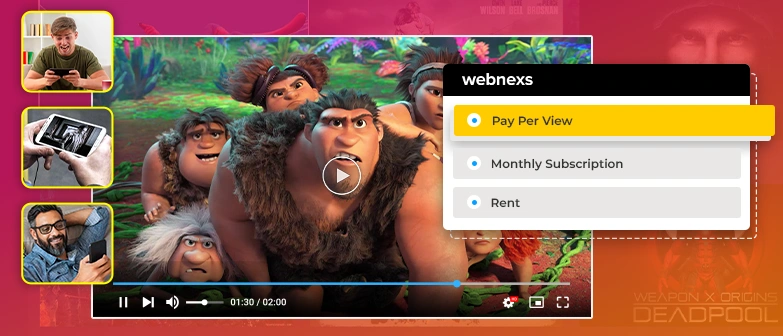

What is PPV? How Does Pay Per View Work in Streaming

When discussing the monetization of videos, what immediately comes to mind? Most likely, you would assume that in-stream ads are the sole method to boost your earnings. However, that’s not the only option. This emphasizes the importance of exploring other techniques for video monetization, such as what…

-

Build an Ecommerce Marketplace Website like Amazon in 8 Simple Steps

Are you ready to create your online marketplace website and be as successful as Amazon? Look no further! In our comprehensive guide, we provide 8 simple steps to help you build an ecommerce website like Amazon. Dive in and unlock the secrets to make a website like…

-

Ecommerce Marketplace Seller Onboarding Process In 2024

Struggling to streamline your ecommerce marketplace seller onboarding process? Look no further! In this article, we’ll explore the Pain, Agitate, and Solve (PAS) framework to address the common challenges faced by sellers during onboarding. From lengthy procedures to confusing documentation requirements, we’ll delve into the frustrations that…

-

The Ultimate Guide to Starting an OTT Platform in 5 Simple Steps

Are you looking to start an OTT platform? Here’s a brief guide on how to build your OTT platform using cutting-edge technologies to efficiently manage and deliver your video content across mobile devices, smart TVs, and the web. OTT has revolutionized the way we consume media content. Audiences…